Kubernetes Network

Moving from physical networks using switches, routers, and ethernet cables to virtual networks using software-defined networks (SDN) and virtual interfaces involves a slight learning curve. Of course, the principles remain the same, but there are different specifications and best practices. Kubernetes has its own set of rules, and if you're dealing with containers and the cloud, it helps to understand how Kubernetes networking works.

The Kubernetes Network Model has a few general rules to keep in mind:

Every Pod gets its own IP address: There should be no need to create links between Pods and no need to map container ports to host ports.

NAT is not required: Pods on a node should be able to communicate with all Pods on all nodes without NAT.

Agents get all-access passes: Agents on a node (system daemons, the kubelet) can communicate with all the Pods on that node.

Shared namespaces: Containers within a Pod share a network namespace (IP and MAC address), so they can communicate with each other using the loopback address.

What Kubernetes networking solves

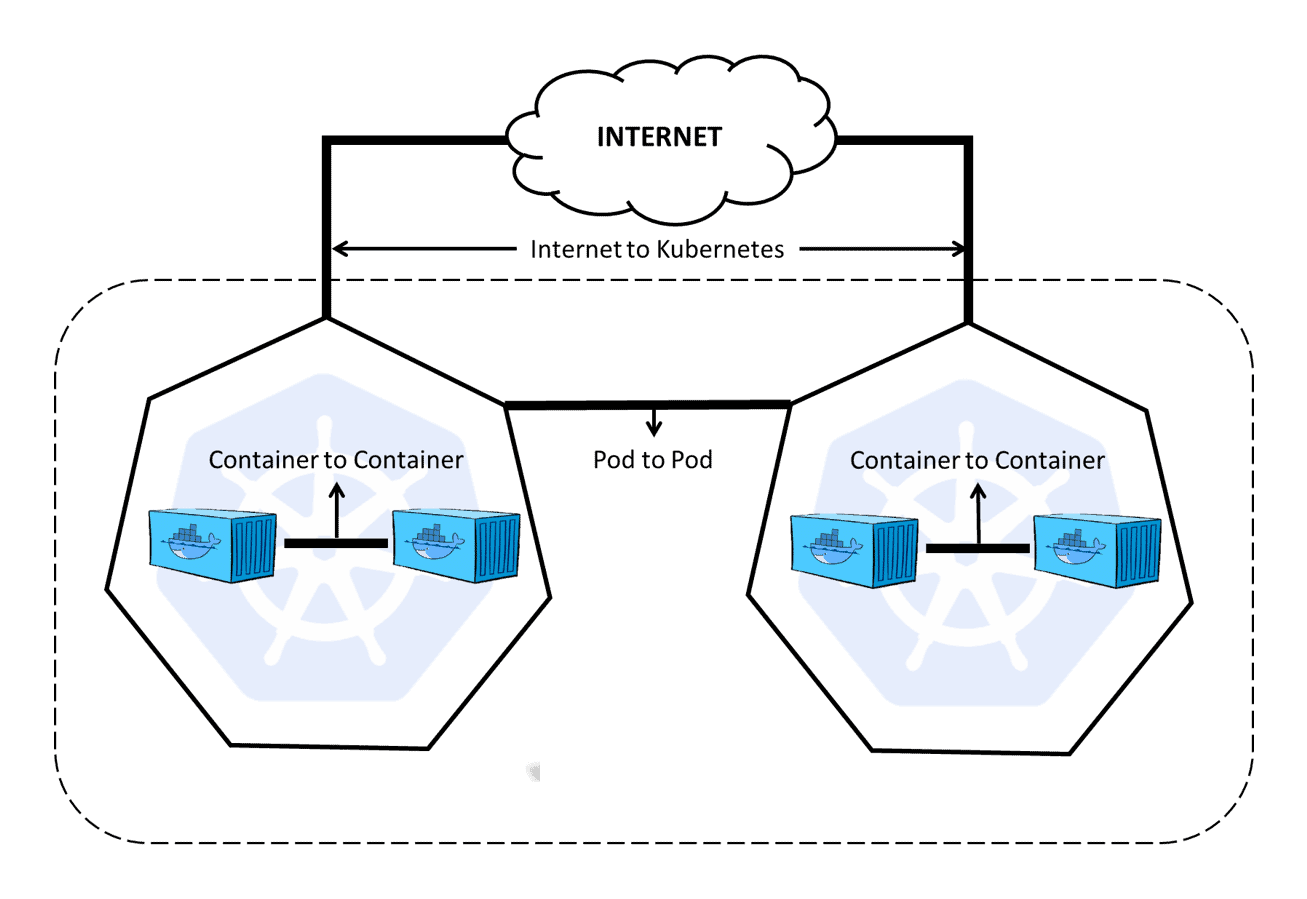

Kubernetes networking is designed to ensure that the different entity types within Kubernetes can communicate. The layout of a Kubernetes infrastructure has, by design, a lot of separation. Namespaces, containers, and Pods are meant to keep components distinct from one another, so a highly structured plan for communication is important.

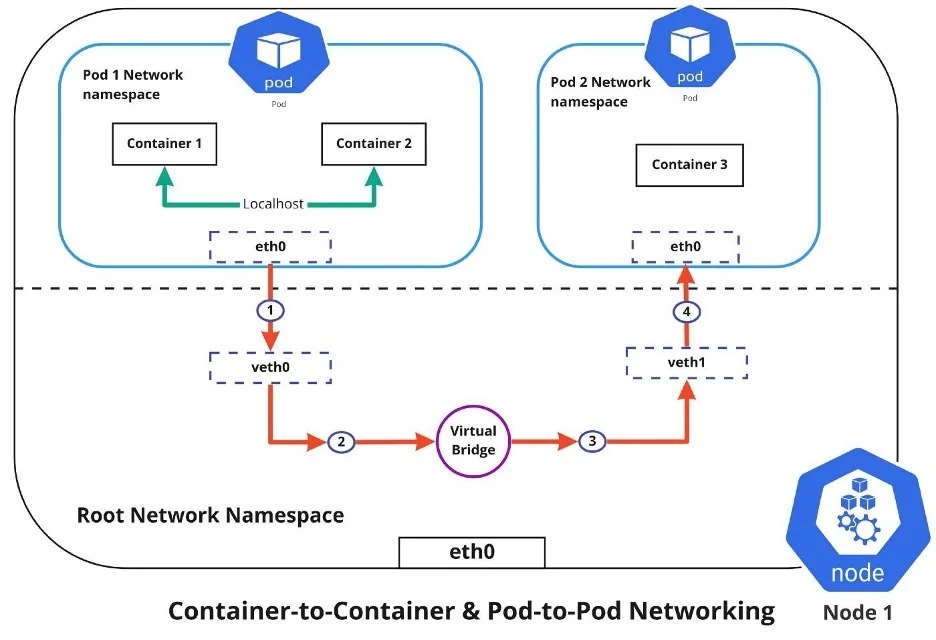

(Nived Velayudhan, CC BY-SA 4.0)

Container-to-container networking

Container-to-container networking happens through the Pod network namespace. Network namespaces allow you to have separate network interfaces and routing tables that are isolated from the rest of the system and operate independently. Every Pod has its own network namespace, and containers inside that Pod share the same IP address and ports. All communication between these containers happens through localhost, as they are all part of the same namespace. (Represented by the green line in the diagram.)
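As a rough illustration of two processes talking inside one shared network namespace, the sketch below runs a tiny TCP server and a client in the same Python process and connects them over 127.0.0.1. In a real Pod the two ends would be separate containers. The function names and the `ack:` reply are invented for this example.

```python
import socket
import threading


def serve_once(server_sock: socket.socket) -> None:
    """Accept one connection and echo the payload back with a prefix."""
    conn, _ = server_sock.accept()
    with conn:
        data = conn.recv(1024)
        conn.sendall(b"ack:" + data)


def localhost_round_trip(message: bytes) -> bytes:
    """Send a message to a server listening on the loopback address."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        # Port 0 asks the OS for any free port; both ends share the same
        # port space, just as containers in one Pod do.
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        port = server.getsockname()[1]

        worker = threading.Thread(target=serve_once, args=(server,), daemon=True)
        worker.start()

        with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
            client.sendall(message)
            reply = client.recv(1024)

        worker.join(timeout=5)
    return reply


if __name__ == "__main__":
    print(localhost_round_trip(b"hello"))
```

Because the port space is shared, two containers in the same Pod cannot both listen on the same port.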

Pod-to-Pod networking

With Kubernetes, every node has a designated CIDR range of IPs for Pods. This ensures that every Pod receives a unique IP address that other Pods in the cluster can see. When a new Pod is created, the IP addresses never overlap. Unlike container-to-container networking, Pod-to-Pod communication happens using real IPs, whether you deploy the Pod on the same node or a different node in the cluster.
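The per-node CIDR idea can be sketched with Python's standard `ipaddress` module. The cluster range `10.244.0.0/16`, the `/24` per-node size, and the node names are assumptions chosen for illustration; real clusters configure these values themselves.

```python
import ipaddress
import itertools


def allocate_node_pod_cidrs(cluster_cidr, node_names, node_prefix=24):
    """Carve one non-overlapping Pod subnet per node out of the cluster range."""
    cluster = ipaddress.ip_network(cluster_cidr)
    subnets = list(
        itertools.islice(cluster.subnets(new_prefix=node_prefix), len(node_names))
    )
    if len(subnets) < len(node_names):
        raise ValueError("cluster CIDR is too small for this many nodes")
    return dict(zip(node_names, subnets))


def node_for_pod_ip(allocations, pod_ip):
    """Return the node whose Pod subnet contains the given IP, or None."""
    address = ipaddress.ip_address(pod_ip)
    for node, cidr in allocations.items():
        if address in cidr:
            return node
    return None


allocations = allocate_node_pod_cidrs(
    "10.244.0.0/16", ["node-a", "node-b", "node-c"]
)

if __name__ == "__main__":
    for node, cidr in allocations.items():
        print(node, cidr)
    print(node_for_pod_ip(allocations, "10.244.1.17"))
```

Since the subnets never overlap, a Pod IP identifies the node it lives on, which is what lets traffic be routed to the right node.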

The diagram shows that for Pods to communicate with each other, the traffic must flow between the Pod network namespace and the Root network namespace. This is achieved by connecting both the Pod namespace and the Root namespace by a virtual ethernet device or a veth pair (veth0 to Pod namespace 1 and veth1 to Pod namespace 2 in the diagram). A virtual network bridge connects these virtual interfaces, allowing traffic to flow between them using the Address Resolution Protocol (ARP).

When data is sent from Pod 1 to Pod 2, the flow of events is:

Pod 1 traffic flows through eth0 to the Root network namespace's virtual interface veth0.

Traffic then goes through veth0 to the virtual bridge, which is connected to veth1.

Traffic goes through the virtual bridge to veth1.

Finally, traffic reaches the eth0 interface of Pod 2 through veth1.

Pod-to-Service networking

Pods are very dynamic. They may need to scale up or down based on demand. They may be created again in case of an application crash or a node failure. These events cause a Pod's IP address to change, which would make networking a challenge.

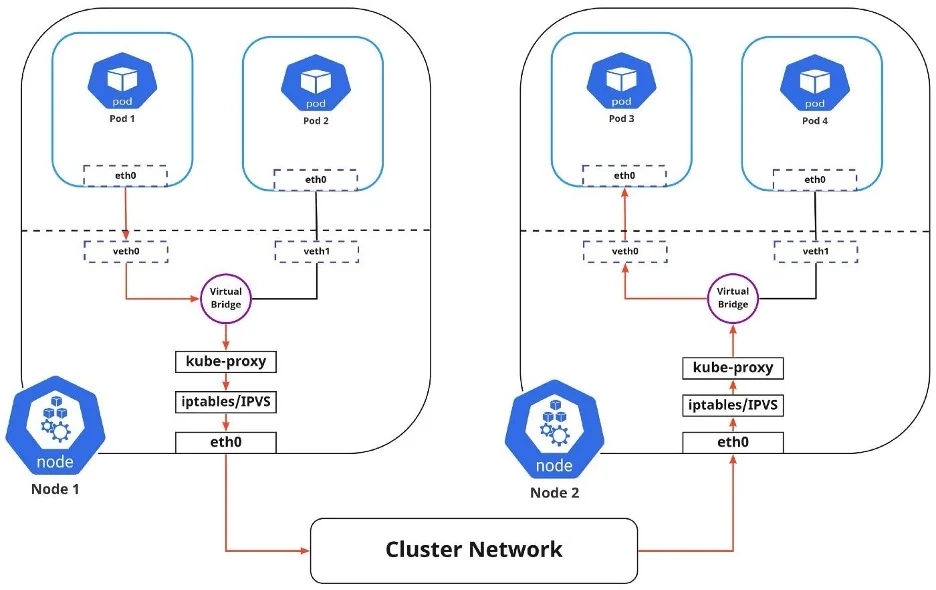

(Nived Velayudhan, CC BY-SA 4.0)

Kubernetes solves this problem by using the Service function, which does the following:

Assigns a static virtual IP address in the frontend to connect any backend Pods associated with the Service.

Load-balances any traffic addressed to this virtual IP to the set of backend Pods.

Keeps track of the IP address of a Pod, such that even if the Pod IP address changes, the clients don't have any trouble connecting to the Pod because they only directly connect with the static virtual IP address of the Service itself.
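The three responsibilities above can be modelled in a few lines. This is a toy sketch and not how Kubernetes implements Services: the `ServiceSketch` class and its methods are hypothetical, and the IP addresses are made up.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ServiceSketch:
    """A stable virtual IP in front of a changing set of Pod IPs."""

    cluster_ip: str
    port: int
    endpoints: set = field(default_factory=set)

    def pod_added(self, pod_ip: str) -> None:
        self.endpoints.add(pod_ip)

    def pod_removed(self, pod_ip: str) -> None:
        self.endpoints.discard(pod_ip)

    def pick_backend(self, rng=random) -> str:
        """Choose one current backend for a request sent to the virtual IP."""
        if not self.endpoints:
            raise LookupError("no backend Pods are available")
        return rng.choice(sorted(self.endpoints))


if __name__ == "__main__":
    svc = ServiceSketch(cluster_ip="10.96.0.10", port=80)
    svc.pod_added("10.244.0.5")
    svc.pod_added("10.244.1.7")

    # A Pod is recreated and comes back with a different IP address.
    svc.pod_removed("10.244.0.5")
    svc.pod_added("10.244.2.9")

    # Clients keep using the same virtual IP throughout.
    print(svc.cluster_ip, "->", svc.pick_backend())
```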

The in-cluster load balancing occurs in two ways:

iptables: In this mode, kube-proxy watches the API Server for changes. For each new Service, it installs iptables rules that capture traffic to the Service's clusterIP and port and redirect it to a backend Pod for the Service. The Pod is selected randomly. This mode is reliable and has a lower system overhead because Linux Netfilter handles traffic without the need to switch between userspace and kernel space.

IPVS: IPVS is built on top of Netfilter and implements transport-layer load balancing. IPVS uses the Netfilter hook function, using the hash table as the underlying data structure, and works in the kernel space. This means that kube-proxy in IPVS mode redirects traffic with lower latency, higher throughput, and better performance than kube-proxy in iptables mode.
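One way to see how a list of rules evaluated in order can still pick a Pod uniformly at random is the sketch below. It assumes the random choice is expressed as a chain of rules, where rule i matches with probability 1/(n - i) if no earlier rule matched; as far as I know this is how the iptables mode is usually described, but treat it as an assumption. The helper names are invented.

```python
from fractions import Fraction


def rule_probabilities(n: int) -> list:
    """Per-rule match probability for n backends, evaluated in order."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(n: int) -> list:
    """Overall share of traffic each backend receives from the rule chain."""
    shares = []
    remaining = Fraction(1)  # probability that no earlier rule matched
    for probability in rule_probabilities(n):
        shares.append(remaining * probability)
        remaining *= 1 - probability
    return shares


if __name__ == "__main__":
    for n in (2, 3, 5):
        print(n, [str(p) for p in rule_probabilities(n)],
              [str(s) for s in effective_shares(n)])
```

The last rule always matches (probability 1), so every packet ends up at some backend, and each backend receives exactly 1/n of the traffic.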

The diagram above shows the packet flow from Pod 1 to Pod 3 through a Service to a different node (marked in red). A packet traveling to the virtual bridge has to use the default route (eth0), because ARP running on the bridge does not understand the Service. The packets are then filtered by iptables, which uses the rules that kube-proxy defined on the node. This is why the diagram shows the path as it does.

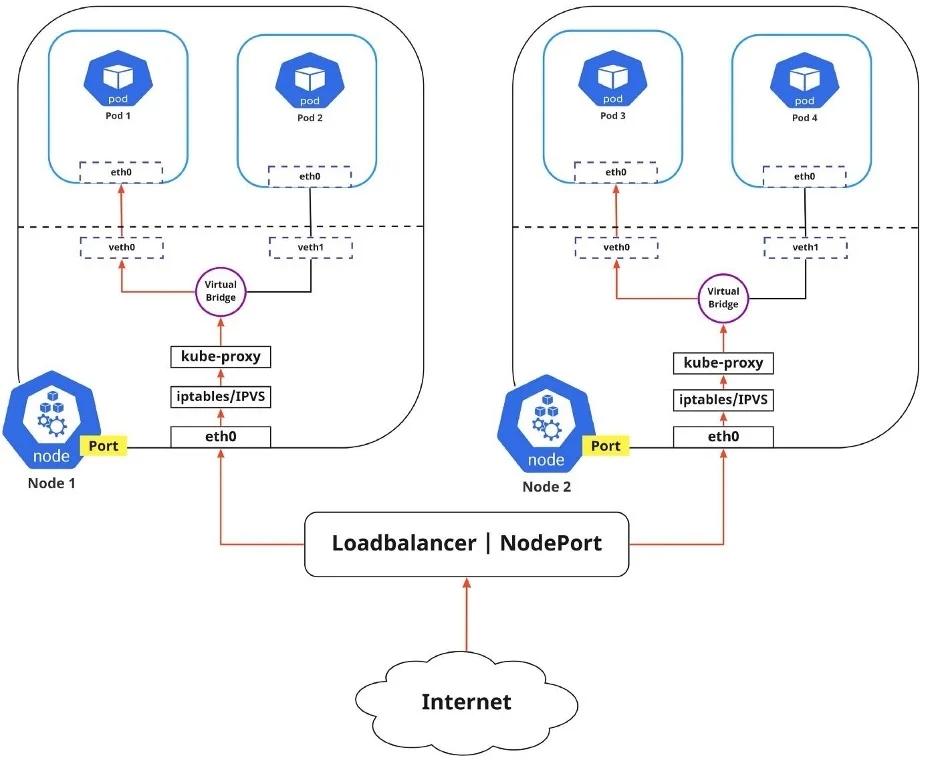

Internet-to-Service networking

So far, I have discussed how traffic is routed within a cluster. There's another side to Kubernetes networking, though, and that's exposing an application to the external network.

(Nived Velayudhan, CC BY-SA 4.0)

You can expose an application to an external network in two different ways.

Egress: Use this when you want to route traffic from your Kubernetes Service out to the Internet. In this case, iptables performs the source NAT, so the traffic appears to be coming from the node and not the Pod.

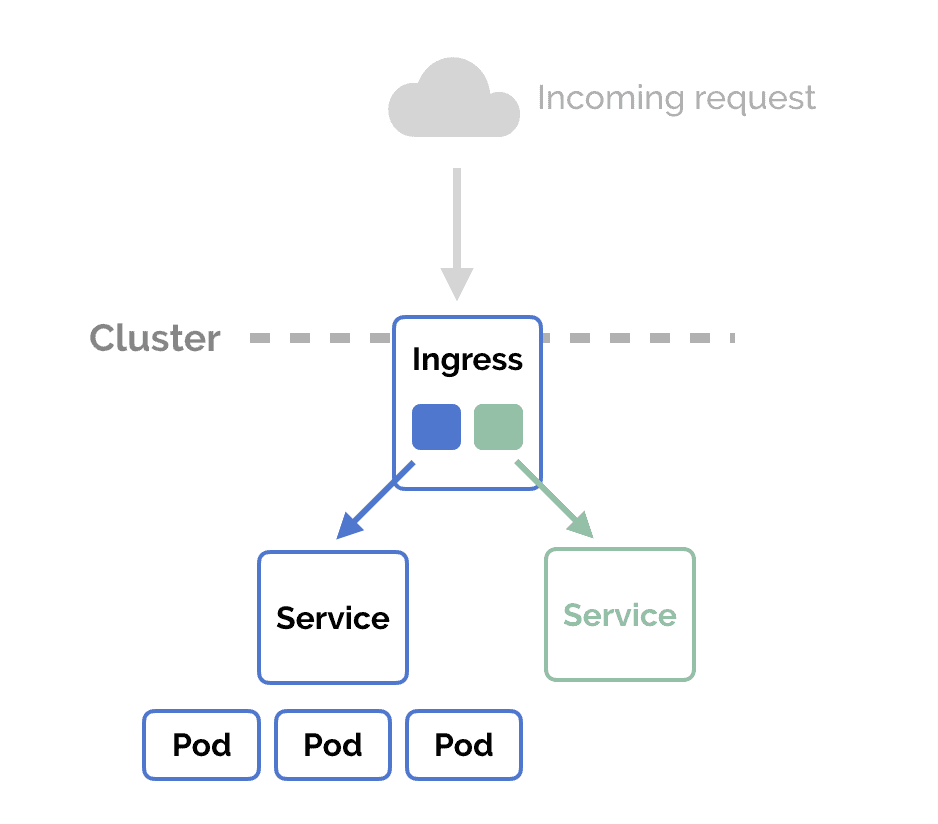

Ingress: This is the incoming traffic from the external world to Services. Ingress also allows and blocks particular communications with Services using rules for connections. Typically, there are two ingress solutions that function on different network stack regions: the service load balancer and the ingress controller.

Discovering Services

There are two ways Kubernetes discovers a Service:

Environment Variables: The kubelet service running on the node where your Pod runs is responsible for setting up environment variables for each active service in the format {SVCNAME}_SERVICE_HOST and {SVCNAME}_SERVICE_PORT. You must create the Service before the client Pods come into existence. Otherwise, those client Pods won't have their environment variables populated.

DNS: The DNS service is implemented as a Kubernetes service that maps to one or more DNS server Pods, which are scheduled just like any other Pod. Pods in the cluster are configured to use the DNS service, with a DNS search list that includes the Pod's own namespace and the cluster's default domain. A cluster-aware DNS server, such as CoreDNS, watches the Kubernetes API for new Services and creates a set of DNS records for each one. If DNS is enabled throughout your cluster, all Pods can automatically resolve Services by their DNS name. The Kubernetes DNS server is the only way to access ExternalName Services.
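Both discovery mechanisms can be sketched from the client's side. The environment variable naming (upper case, dashes turned into underscores) follows the Kubernetes documentation. The `cluster.local` domain and the three-entry search list are common defaults that I am assuming here, and the helper names and the `redis-primary` Service are hypothetical.

```python
import os


def service_env_names(service_name: str) -> tuple:
    """Names of the HOST and PORT variables the kubelet sets for a Service."""
    prefix = service_name.upper().replace("-", "_")
    return f"{prefix}_SERVICE_HOST", f"{prefix}_SERVICE_PORT"


def lookup_from_env(service_name: str, environ=None):
    """Return (host, port) from the environment, or None if not populated."""
    environ = os.environ if environ is None else environ
    host_var, port_var = service_env_names(service_name)
    host, port = environ.get(host_var), environ.get(port_var)
    if host is None or port is None:
        return None  # e.g. the Service was created after this Pod started
    return host, int(port)


def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name of a Service (assumed default domain)."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def search_candidates(name: str, pod_namespace: str, cluster_domain: str = "cluster.local") -> list:
    """Names a resolver would try for a short name, in search-list order."""
    search_list = [
        f"{pod_namespace}.svc.{cluster_domain}",
        f"svc.{cluster_domain}",
        cluster_domain,
    ]
    return [f"{name}.{suffix}" for suffix in search_list]


if __name__ == "__main__":
    fake_env = {"REDIS_PRIMARY_SERVICE_HOST": "10.96.0.11",
                "REDIS_PRIMARY_SERVICE_PORT": "6379"}
    print(lookup_from_env("redis-primary", fake_env))
    print(service_fqdn("redis-primary", "prod"))
    print(search_candidates("redis-primary", "prod"))
```

The search list is why a Pod can reach a Service in its own namespace by its short name, while a Service in another namespace needs at least `<service>.<namespace>`.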

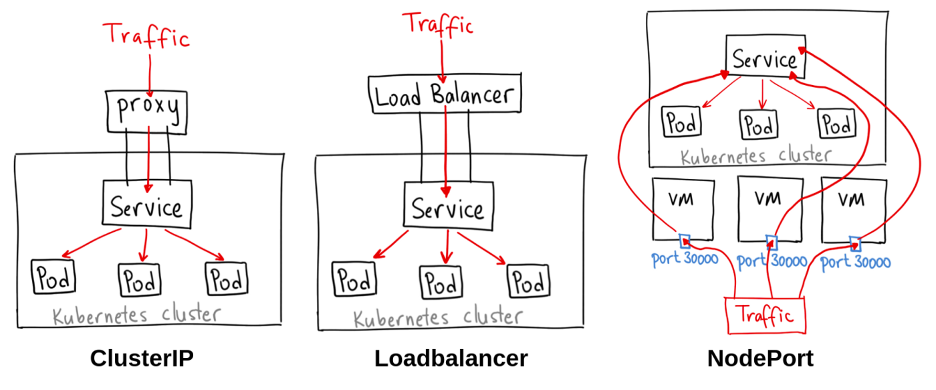

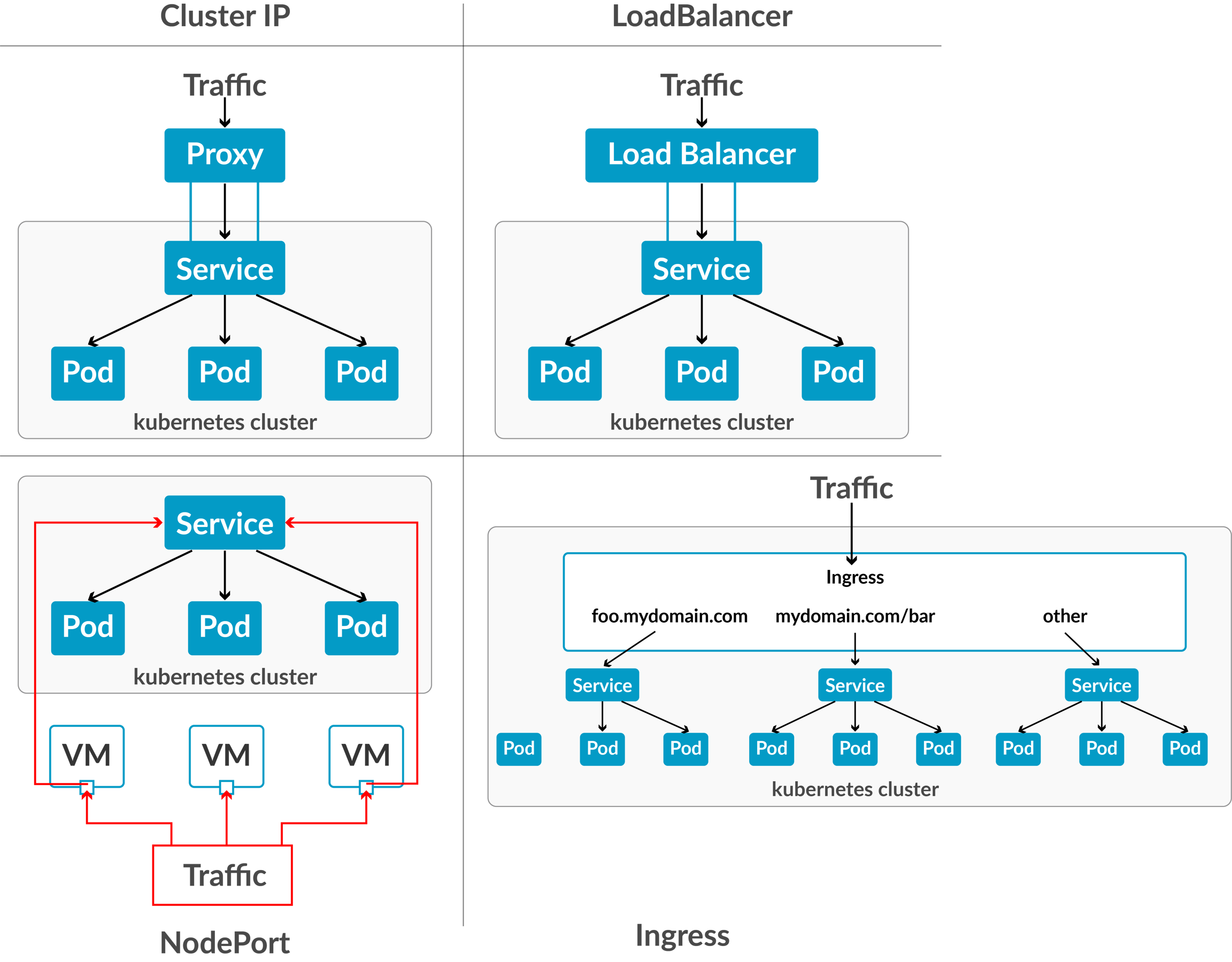

ServiceTypes for publishing Services:

Kubernetes Services provide you with a way of accessing a group of Pods, usually defined by using a label selector. This could be applications trying to access other applications within the cluster, or it could allow you to expose an application running in the cluster to the external world. Kubernetes ServiceTypes enable you to specify what kind of Service you want.

(Ahmet Alp Balkan, CC BY-SA 4.0)

The different ServiceTypes are:

ClusterIP: This is the default ServiceType. It makes the Service only reachable from within the cluster and allows applications within the cluster to communicate with each other. There is no external access.

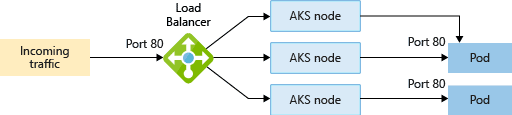

LoadBalancer: This ServiceType exposes the Services externally using the cloud provider's load balancer. Traffic from the external load balancer is directed to the backend Pods. The cloud provider decides how it is load-balanced.

NodePort: This allows the external traffic to access the Service by opening a specific port on all the nodes. Any traffic sent to this Port is then forwarded to the Service.

ExternalName: This type of Service maps a Service to a DNS name by using the contents of the externalName field by returning a CNAME record with its value. No proxying of any kind is set up.

Cluster Networking

In a standard Kubernetes deployment, there are several networking variations you should be aware of. Below are the most common networking situations to know.


Container-to-container Networking

The smallest object we can deploy in Kubernetes is the pod. Within each pod, however, you may want to run multiple containers. A common use case is a helper pattern, where a secondary container assists a primary container with tasks such as pushing and pulling data. Container-to-container communication within a K8s pod uses either the shared file system or the localhost network interface.

Pod-to-Pod Networking

Pod-to-pod networking can occur for pods within the same node or across nodes. Each of your nodes has a classless inter-domain routing (CIDR) block. This block is a defined set of unique IP addresses that are assigned to pods within that node. This ensures that each pod is provided with a unique IP regardless of which node it is in.

There are 2 types of communication.

Inter-node communication

Intra-node communication


Pod-to-Service Networking

Kubernetes is designed to allow pods to be replaced dynamically, as needed. This means that pod IP addresses are not durable unless special precautions are taken, such as for stateful applications. To address this issue and ensure that communication with and between pods is maintained, Kubernetes uses services.

Kubernetes services keep track of the pods behind them and of their IP addresses as these change over time. These services abstract pod addresses by assigning a single virtual IP (a cluster IP) to a group of pod IPs. Then, any traffic sent to the virtual IP is distributed to the associated pods.

This service IP enables pods to be created and destroyed as needed without affecting overall communications. It also enables Kubernetes services to act as in-cluster load balancers, distributing traffic as needed among associated pods.

Internet-to-Service Networking

The final networking situation that is needed for most deployments is between the Internet and services. Whether you are using Kubernetes for internal or external applications, you generally need Internet connectivity. This connectivity enables users to access your services and distributed teams to collaborate.

When setting up external access, there are two techniques you need to use: egress and ingress. These are policies that you can set up with either whitelisting or blacklisting to control traffic into and out of your network.


What are Services in Kubernetes?

A Kubernetes Service provides a stable IP address, a single DNS name, and load balancing for a set of Pods. A Service identifies its member Pods with a selector. For a Pod to be a member of the Service, the Pod must have all of the labels specified in the selector. A label is an arbitrary key/value pair that is attached to an object. A Service is also a REST object: an abstraction that defines a logical set of Pods and a policy for accessing them.
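The membership rule (a Pod must carry every label named in the selector) is easy to express in code. The sketch below covers only simple equality-based selectors; the Pod names and labels are invented.

```python
def matches(selector: dict, pod_labels: dict) -> bool:
    """True if the Pod has every key/value pair the selector asks for."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


def select_pods(selector: dict, pods: dict) -> list:
    """Names of the Pods whose labels satisfy the selector."""
    return [name for name, labels in pods.items() if matches(selector, labels)]


if __name__ == "__main__":
    pods = {
        "web-1": {"app": "web", "tier": "frontend"},
        "web-2": {"app": "web", "tier": "frontend", "canary": "true"},
        "db-1": {"app": "db", "tier": "backend"},
    }
    print(select_pods({"app": "web", "tier": "frontend"}, pods))
```

Extra labels on a Pod (such as `canary` above) do not prevent a match; only missing or different labels do.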

Services select Pods based on their labels. When a network request is made to the service, it selects all Pods in the cluster matching the service’s selector, chooses one of them, and forwards the network request to it. Let us look at the core attributes of any kind of service in Kubernetes:

Label selector that locates pods

ClusterIP IP address & assigned port number

Port definitions

Optional mapping of incoming ports to a targetPort
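To see how these attributes fit together, here is a minimal sketch that builds a Service manifest as a Python dictionary and prints it as JSON. The Service name, labels, and port numbers are placeholders. The `clusterIP` field is left out on the assumption that the cluster assigns one.

```python
import json


def build_service_manifest(name, selector, port, target_port, service_type="ClusterIP"):
    """Assemble a Service manifest from its core attributes."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            # Label selector that locates the Pods.
            "selector": dict(selector),
            # Port definition: the Service port and the Pod port it maps to.
            "ports": [
                {"protocol": "TCP", "port": port, "targetPort": target_port}
            ],
        },
    }


if __name__ == "__main__":
    manifest = build_service_manifest("backend", {"app": "backend"}, 80, 8080)
    print(json.dumps(manifest, indent=2))
```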


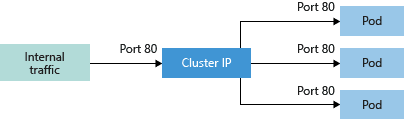

ClusterIP

ClusterIP is the default Service type in Kubernetes. For this type, Kubernetes creates a stable IP address that is accessible from all the nodes in the cluster. The scope of the Service is confined to the cluster. Its main use case is connecting our frontend Pods to our backend Pods, since for security reasons we do not expose backend Pods to the outside world.

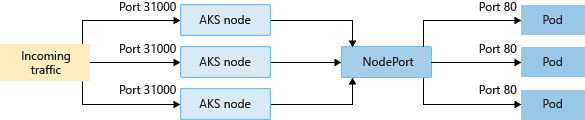

NodePort

NodePort exposes the Service on each Node's IP at a static port (the NodePort). It builds on top of ClusterIP by mapping a port on every worker Node, either one you specify or one Kubernetes chooses, to the Service. We can contact a NodePort Service from outside the cluster by requesting <Node IP>:<NodePort>. The main use case of a NodePort Service is to expose our Pods to the outside world. Note: By default, node ports must fall in the range 30000-32767.
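A small sketch of the addressing rule: given the Node IPs and a node port, every Node offers the same entry point. The 30000-32767 range used for validation is the one stated above, the IP addresses are documentation examples, and the helper name is hypothetical.

```python
# Node port range as stated in the text (upper bound is inclusive).
NODE_PORT_RANGE = range(30000, 32768)


def node_port_endpoints(node_ips, node_port):
    """Return the <Node IP>:<NodePort> addresses that reach the Service."""
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(
            f"node port {node_port} is outside "
            f"{NODE_PORT_RANGE.start}-{NODE_PORT_RANGE.stop - 1}"
        )
    return [f"{ip}:{node_port}" for ip in node_ips]


if __name__ == "__main__":
    print(node_port_endpoints(["192.0.2.10", "192.0.2.11"], 30080))
```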

LoadBalancer

The LoadBalancer Service is the standard way of exposing our Services to the outside world or the internet. When multiple Pods are deployed on multiple Nodes, we could reach the application through the public IP of any Node plus the node port. But this raises some problems: which Node's IP do we give to clients, and how is traffic balanced across the Nodes in the cluster? A simple solution is a LoadBalancer Service.


Ingress Controller

An Ingress Controller is an intelligent load balancer. Ingress is a high-level abstraction that allows simple host- or URL-based HTTP routing. Ingress itself only defines the routing rules; a proxy, typically a third-party one, implements them, and such an implementation is called an Ingress Controller. It works as a Layer 7 load balancer.
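Host- and URL-based routing can be sketched as a lookup over a list of rules. This is a simplified model and not any particular controller's algorithm: it assumes exact host matching, prefix matching on whole path segments, and that the longest matching prefix wins. The hosts and Service names are invented.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    host: str
    path_prefix: str
    service: str


def path_matches(prefix: str, path: str) -> bool:
    """Prefix match on whole path segments ("/api" matches "/api/x", not "/apix")."""
    if prefix == "/":
        return path.startswith("/")
    prefix = prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


def route(rules, host: str, path: str) -> Optional[str]:
    """Pick the backend Service for a request, or None if no rule matches."""
    candidates = [
        rule for rule in rules
        if rule.host == host and path_matches(rule.path_prefix, path)
    ]
    if not candidates:
        return None
    # Assumption: the most specific (longest) prefix takes precedence.
    return max(candidates, key=lambda rule: len(rule.path_prefix)).service


if __name__ == "__main__":
    rules = [
        Rule("shop.example.com", "/", "web-svc"),
        Rule("shop.example.com", "/api", "api-svc"),
        Rule("blog.example.com", "/", "blog-svc"),
    ]
    print(route(rules, "shop.example.com", "/api/orders"))
    print(route(rules, "shop.example.com", "/cart"))
    print(route(rules, "unknown.example.com", "/"))
```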


DNS in Kubernetes

The Domain Name System (DNS) is the networking system that allows us to resolve human-friendly names to unique IP addresses. By default, most Kubernetes clusters automatically configure an internal DNS service to provide a lightweight mechanism for service discovery. kube-dns and CoreDNS are two established DNS solutions for defining DNS naming rules and resolving pod and service DNS names to their corresponding cluster IPs. With DNS, a Kubernetes service can be referenced by a name that corresponds to any number of backend pods managed by the service.

A kube-dns Pod consists of three separate containers:

Kubedns: watches the Kubernetes master for changes in Services and Endpoints, and maintains in-memory lookup structures to serve DNS requests.

Dnsmasq: adds DNS caching to improve performance.

Sidecar: provides a single health check endpoint to perform health checks for dnsmasq and kubedns.

Lab 1: Create an ingress route to an Nginx deployment

Lab 2: Create an ingress route to a WordPress deployment
